Few areas in modern computing are as poorly understood as artificial intelligence (AI). Researched for decades, AI is still considered an elusive subject – partly due to its complex and nebulous nature.

The term "artificial intelligence" was coined by American computer scientist John McCarthy for a 1956 workshop at Dartmouth College in Hanover, New Hampshire, U.S.

Since then, research on AI has progressed in fits and starts, attracting the attention of only a small circle in academia and government.

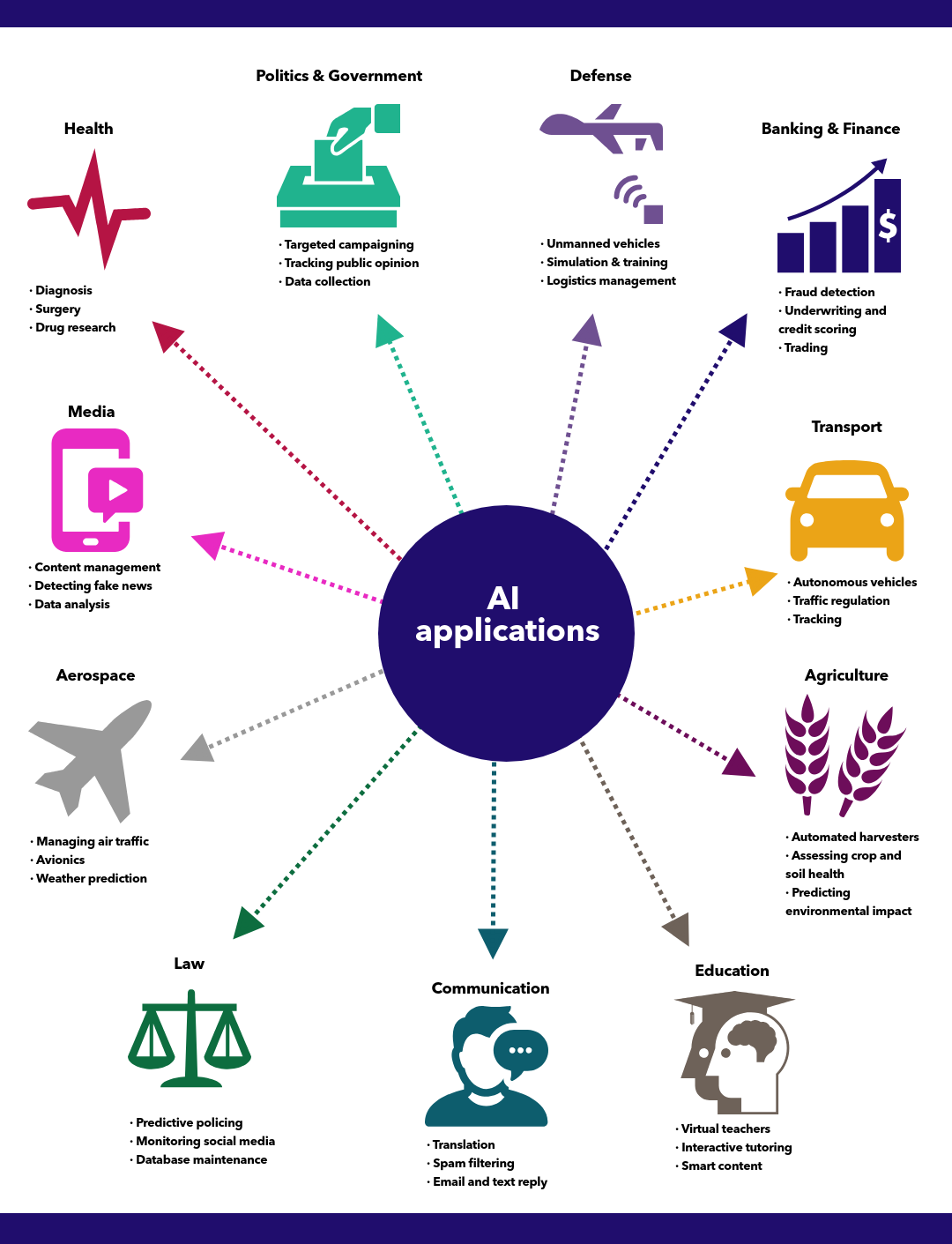

Only recently has this ever-expanding technology made a significant impact on society – from healthcare and education to business, transport and defense, AI is being used to develop cutting-edge applications.

Klaus Schwab, founder and Executive Chairman of the World Economic Forum (WEF), credited AI with helping usher in a Fourth Industrial Revolution and “disrupting almost every industry in every country.”

Others have erred on the side of caution. Renowned physicist Stephen Hawking warned that “artificial intelligence could spell the end of the human race,” while tech entrepreneur Elon Musk cautioned: “With artificial intelligence, we are summoning the demon.”

What is AI?

Such observations prompt the questions: What is AI? How does it work? Is AI a threat to our way of life or a boon?

There are no easy answers, which brings us back to the lack of a single, agreed definition of artificial intelligence.

In simple terms, AI is the simulation of human intelligence by computers. It relates to the ability to learn, reason and take appropriate actions to achieve a specific goal.

While Hollywood depicts tales of AI going rogue, the current crop of technologies is not that scary – or even that smart.

AI today involves mundane processes such as machine learning, cognitive analytics and other nascent capabilities.

AI can be categorized as either narrow or general. Narrow AI, also known as "weak" AI, performs a single job and usually operates within set parameters. Weak AI is the machine intelligence that surrounds us today. Typical examples are Google Assistant, Google Translate and Siri.

Artificial General Intelligence (AGI) or "strong" AI is the future. AGI refers to systems that exhibit human ingenuity and find solutions to unfamiliar tasks without outside intervention. Such systems would be able to reason, make judgments, plan, learn and be innovative.

CGTN Infographic

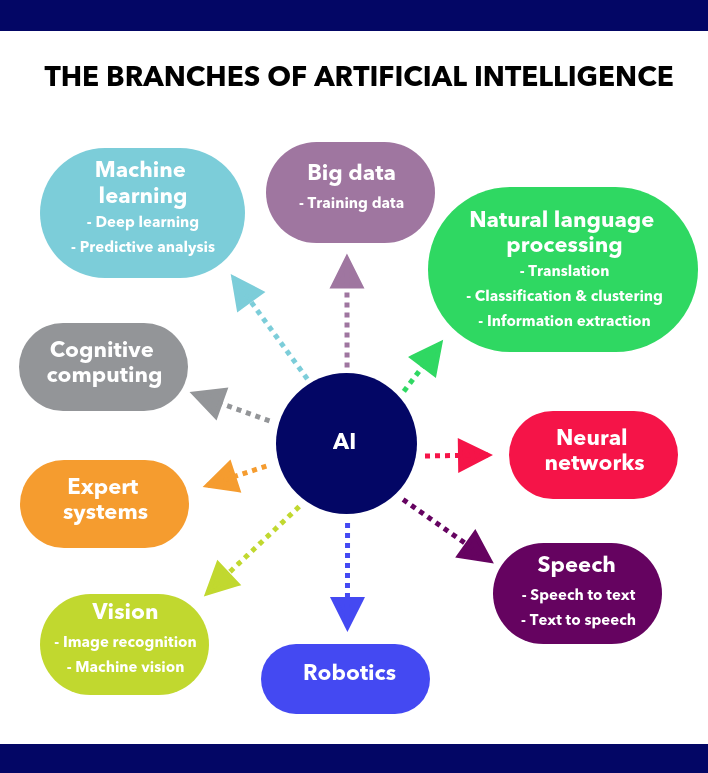

Like most evolving concepts, AI is littered with jargon and sub-fields. Here are a few:

- Machine learning: The process by which a computer learns from large data sets to perform tasks, rather than following rules written out by hand. Machine learning and AI aren’t quite the same but do share similarities. A machine learning algorithm can identify the kinds of patterns it was trained on but lacks the acumen to think and improvise.

- Neural networks: An important tool in machine learning that identifies patterns in data that are far too complex for humans to grasp. Inspired by biological neural networks, a neural network is a framework in which different algorithms work together to process intricate inputs.

- Deep learning: The result of neural networks learning from large amounts of data by performing a job repeatedly, each time tweaking their approach slightly for a better outcome.

- Natural language processing (NLP): The branch of AI that gives computers the ability to process, analyze and manipulate human communication, both speech and text. NLP is best represented by voice assistants such as Alexa and Siri.

- Cognitive computing: A cognitive system mimics the human brain and helps improve decision-making by extracting information from unstructured data. Self-learning algorithms, pattern recognition and natural language processing are key elements of cognitive analysis.

- Computer vision: The field of AI that relies on pattern recognition and deep learning to interpret the visual world. It helps computers to analyze images, videos and multi-dimensional data in real time.
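The "perform a job repeatedly, each time tweaking it slightly" idea behind machine learning and deep learning can be shown in a few lines of Python. The sketch below is not a neural network and not how any particular product works; it is a toy, assuming made-up data points and a made-up learning rate, in which a program adjusts a single number until its guesses fit the data. The function name `train` and all values are hypothetical.

```python
# Toy illustration of learning by repetition: fit y = weight * x to a few points.
# The data, learning rate and number of passes are made up for this sketch.

def train(points, learning_rate=0.01, passes=200):
    """Return the learned weight and the average error recorded on each pass."""
    weight = 0.0
    errors = []
    for _ in range(passes):
        # Measure how wrong the current guess is, averaged over all points.
        error = sum((weight * x - y) ** 2 for x, y in points) / len(points)
        errors.append(error)
        # Work out which direction would reduce the error (the gradient).
        gradient = sum(2 * (weight * x - y) * x for x, y in points) / len(points)
        # Tweak the weight slightly in that direction.
        weight -= learning_rate * gradient
    return weight, errors


if __name__ == "__main__":
    # Hypothetical measurements that roughly follow y = 3x.
    data = [(1, 3.1), (2, 5.9), (3, 9.2), (4, 11.8)]
    weight, errors = train(data)
    print(f"learned weight: {weight:.2f}")
    print(f"error on first pass: {errors[0]:.2f}, on last pass: {errors[-1]:.4f}")
```

Real systems repeat the same loop with millions of adjustable numbers instead of one, which is why they need large data sets and considerable computing power.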

Is AI beneficial?

As intelligent machines continue to advance in capability and scope, AI has the potential to provide spectacular benefits for both industry and the masses.

In the business and consumer space, AI offers enhanced automation, real-time assistance, predictive analysis and precise data mining capabilities.

Some key advantages of AI include 24/7 availability, error reduction, better speed and accuracy, usefulness in everyday applications and the ability to undertake tedious and hazardous jobs.

It has already made its mark in several fields, such as robotics, driverless cars, smart weather forecasting, disaster response, the Internet of Things (IoT), medicine, retail and finance.

The International Data Corporation (IDC), a market intelligence firm, expects global spending on AI to hit 79.2 billion U.S. dollars by 2022, more than double the 35.8 billion U.S. dollars forecast for 2019. According to IDC, the sectors experiencing the fastest growth in AI investments are federal/central governments, customer services, education and healthcare.

U.S. tech giants have been some of the biggest backers of artificial intelligence, with Google, Amazon, Facebook, Microsoft and Apple pouring large amounts of money into AI products and services. China’s Baidu, Alibaba, Tencent and Huawei are showing a similar appetite for AI and are, on some occasions, providing greater funding than their Silicon Valley counterparts.

Will AI hurt us?

Experts have divergent views on whether AI could be a threat. The increased use of intelligent machines for daily activities will eventually trigger concerns about employment, privacy, the manipulation of society, discrimination and so-called “fake news.”

The ethics of deploying weaponized AI for warfare has also been a topic of intense debate. The specter of autonomous drones and robots making “kill” decisions has galvanized arms control advocates, human rights activists and technologists to push for a global ban on such platforms.

While the dangers posed by AI are a global talking point, it is important to note that in its present forms it is bound by algorithms developed by humans. For now, the technology is restricted to the role of a facilitator, bereft of any capacity to execute truly independent actions.

(Top image via VCG)

Copyright © 2018 CGTN. Beijing ICP prepared NO.16065310-3
