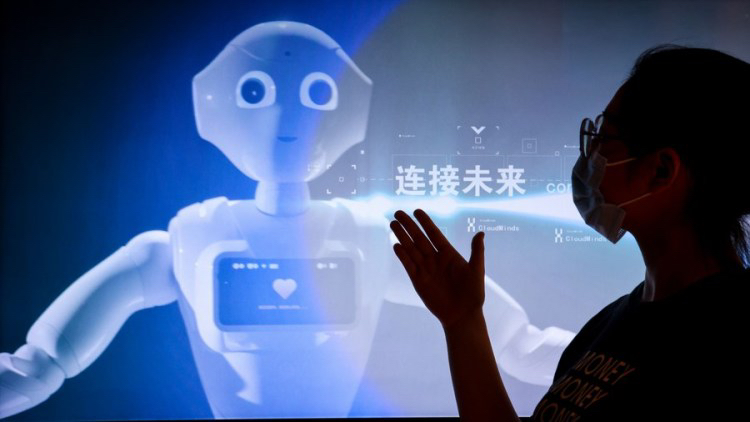

A staff member introduces a promotion video of 5G AI technology at the exhibition center of the national big data (Guizhou) comprehensive pilot zone in southwest China's Guizhou Province, May 26, 2022. /Xinhua

Editor's note: Belunn Se is a senior industry observer based in Shenzhen, China. The article reflects the author's opinions and not necessarily the views of CGTN.

The Generative Pre-trained Transformer (GPT) behind ChatGPT is a deep learning model developed by OpenAI, which was founded in 2015. ChatGPT has attracted widespread attention since its debut. This kind of generative AI can create content, and in some fields its capabilities even exceed the average human level. In a question-and-answer format, ChatGPT can reason, write code and create content. These advantages and the distinctive user experience have greatly broadened its application scenarios: the number of monthly active users reached 100 million by January 2023, making it the fastest-growing internet application in history.

Artificial intelligence-generated content (AIGC) is driving a race across the whole ecosystem: big data, algorithms and computing power.

Server and chip: Core computing power for AI infrastructure

AI supercomputing centers and giga data centers are the infrastructure of AI computing power, and the server is the key carrier of AI computing. A server combines general-purpose CPUs with AI chips such as GPUs, FPGAs and ASICs (application-specific integrated circuits, which in the narrow sense may refer specifically to AI chips), along with other chips of different architectures.

ASICs, GPUs, CPU+FPGA combinations and other chips already provide the underlying computing power for existing models. To meet potentially soaring demand for computing power, chiplet-based heterogeneous integration could accelerate the deployment of various application algorithms in the short term, while in the long term, chips that integrate storage and computing (reducing data transfer inside and outside the chip) may become a direction for upgrading computing power.

Meanwhile, the core infrastructure of ChatGPT is mainly the Azure AI supercomputing platform that Microsoft built with an investment of $1 billion, comprising 285,000 CPU cores and 10,000 GPUs. Its computing power is comparable to that of the world's top five supercomputers. Training ChatGPT takes about 3,600 petaflop/s-days (PF-days) of computing power. Given the computing power of domestic data centers, it would take seven to eight of them to support such operations, with infrastructure investment in the tens of billions of yuan.
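To put the training figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the figure of about 3,600 is meant in petaflop/s-days (one petaflop per second sustained for one day), which is an interpretation of the unit, and it uses a purely hypothetical cluster sustaining 100 petaflop/s; neither the cluster nor its throughput comes from the article.

```python
# Back-of-the-envelope conversion of a training budget quoted in
# petaflop/s-days (PF-days) into total floating-point operations.

PFLOPS = 1e15             # operations per second in one petaflop/s
SECONDS_PER_DAY = 86_400

# Figure from the text, read as PF-days (assumption about the unit).
training_pf_days = 3_600
total_flops = training_pf_days * PFLOPS * SECONDS_PER_DAY

# Hypothetical cluster sustaining 100 petaflop/s on this workload.
sustained_pflops = 100
days_needed = training_pf_days / sustained_pflops

print(f"total operations: {total_flops:.3e}")
print(f"days on a {sustained_pflops} PFLOPS cluster: {days_needed:.0f}")
```

Under these assumptions the training run amounts to roughly 3.1 × 10^23 floating-point operations, or a little over a month of uninterrupted work on the hypothetical cluster.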

In high-precision floating-point computing, domestic GPUs may lag behind the leading foreign brand. In software and ecosystem, the gap with Nvidia's CUDA is even more obvious.

But in the long run, the U.S. ban on sales of high-end GPUs to China has created opportunities for rapid development among domestic GPGPU and AI chip manufacturers, in particular independent and innovative AI chip design companies, such as Huawei and Cambricon, that have fully self-developed their architecture, computing cores, instruction sets and fundamental software. This will facilitate the commercialization and domestic substitution of AI chips.

Algorithm: Deep learning framework and large model parameter contest

The realization of AI involves two critical stages: training and inference.

'Training' means fitting a complex neural network model with big data, that is, using a large amount of labeled or unlabeled data to 'train' the model so that it can perform specific functions. Training requires high computing performance and high precision.

'Inference' means using a trained model to draw conclusions from new data. That is, the existing neural network model performs calculations on new input data to serve specific applications, for example 'chat' and content recommendation for video streaming.
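The difference between the two stages can be illustrated with a toy sketch. It is not how ChatGPT is built: it assumes a one-variable linear model fitted by plain gradient descent, and the function names `train` and `infer` are illustrative only. Training repeatedly adjusts the parameters against labeled data; inference applies the frozen parameters to an input the model has never seen.

```python
# Toy illustration of the two stages: "training" fits parameters to labeled
# data; "inference" applies the fixed parameters to new inputs.

def train(samples, epochs=2000, lr=0.05):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def infer(params, x):
    """Apply the trained parameters to a new input; no learning happens here."""
    w, b = params
    return w * x + b

# Labeled data generated from y = 2x + 1 for x = 0..4.
labeled_data = [(x, 2 * x + 1) for x in range(5)]

params = train(labeled_data)      # expensive, done once
prediction = infer(params, 10)    # cheap per call, done for every request
print(prediction)
```

Even in this toy case, training loops over the whole data set thousands of times while inference is a single evaluation, which mirrors why the two stages place different demands on hardware.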

The AI-Chemist system performs a chemical experiment at a laboratory in the University of Science and Technology of China in Hefei, east China's Anhui Province, Oct. 21, 2022. /Xinhua

Since 2018, the parameter counts and benchmark results of large-scale pre-trained models at home and abroad have kept reaching new highs, and the 'large model' has become a direction in which industry giants are stepping up their efforts. Google, Microsoft, Baidu and other technology giants have invested heavily in manpower and capital to launch their own large models.

GPT-2, released in February 2019, had 1.5 billion parameters, while GPT-3, released in May 2020, reached 175 billion. In January 2021, Google's Switch Transformer, with up to 1.6 trillion parameters, ended GPT-3's reign as the largest AI model and became the first trillion-parameter language model in history.

The deep learning framework is the underlying development tool for AI algorithms and the operating system of the AI era. Frameworks are evolving toward large-model training, which places demands on their distributed training capabilities. Domestic deep learning frameworks such as Huawei's MindSpore and Baidu's PaddlePaddle could see more commercialization opportunities.
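The pace of this parameter contest is easier to see as growth factors. The short sketch below uses only the three parameter counts quoted above; the dictionary and variable names are illustrative.

```python
# Growth factors between successive models, using the parameter counts
# quoted in the text.

models = [
    ("GPT-2", 1.5e9),                 # February 2019
    ("GPT-3", 175e9),                 # May 2020
    ("Switch Transformer", 1.6e12),   # January 2021
]

growth = {}
for (prev_name, prev_params), (name, params) in zip(models, models[1:]):
    growth[f"{prev_name} -> {name}"] = params / prev_params

for step, factor in growth.items():
    print(f"{step}: about {factor:.1f}x more parameters")
```

By this count, GPT-3 is roughly 117 times the size of GPT-2, and Switch Transformer is roughly nine times the size of GPT-3, all within about two years.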

Big-data training: Microsoft ignited the large model arms race with the first-mover advantage

The algorithms behind ChatGPT, including the autoregressive language model and the proximal policy optimization (PPO) reinforcement learning algorithm, are all mature, public algorithms with few secrets. Nonetheless, training at this scale depends not only on the algorithm itself but also on computing hardware and big data sources. Plain natural-language data is publicly available online, so it is not a barrier either. But ChatGPT has a huge first-mover advantage: by opening its public beta first, it has collected a large amount of user interaction data. This data is more valuable and is available only to OpenAI. As long as ChatGPT remains the best language AI, the snowball will only get bigger and harder to catch.

In addition, to prevent ChatGPT from generating harmful information, OpenAI spent considerable resources labeling harmful texts to train the model not to output such content. This data is a barrier that OpenAI has spent several years building.

Moreover, according to relevant estimates, training a large language model (LLM) to the GPT-3 level costs as much as $4.6 million. For ChatGPT, at least tens of thousands of Nvidia A100 GPUs are required to support its computing infrastructure, and a single training run costs more than $12 million.

In short, small companies cannot afford projects like ChatGPT at all. In China, only internet companies of the BAT (Baidu, Alibaba, Tencent) kind, with an independent cloud computing platform and massive user data, can chase ChatGPT.

Leading technology companies, including Google and Baidu, will master ChatGPT-style technology sooner or later. ChatGPT's inference cost is high, and the computing power consumed is directly proportional to the quality of the user experience. However, search engine users are not very sticky and tend to use whichever engine is best, so this may compel technology players to join the game, pushing up the cost of search algorithms.

With ChatGPT, Microsoft has launched an "arms race" in large-model search engines, leaving Google in a dilemma. Of course, this is not a "rat race"; after all, users will ultimately benefit.
