16:16, 28-Mar-2019

Livestreaming & Violence: Exploring ways to prevent spread of violent online content

Updated 16:10, 31-Mar-2019

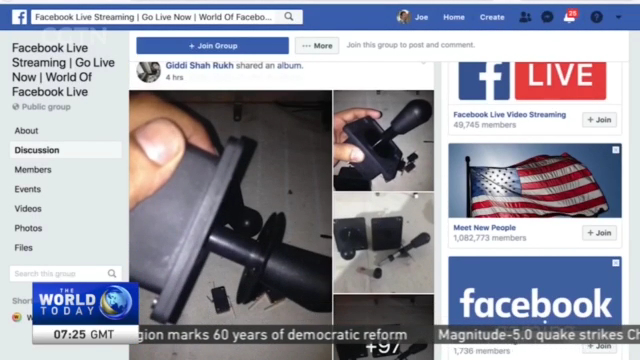

The recent mass shooting at two mosques in New Zealand not only shocked the world but also highlighted a disturbing reality: livestreaming technology can be used for evil purposes. CGTN's Mark Niu looks at how technology made a tragic situation worse and what can be done to correct it.

A massacre designed to go viral. The New Zealand shooter livestreamed the mass shooting on Facebook for 17 minutes.

Fewer than 200 people watched it live, but the video was viewed about 4,000 times before Facebook removed it.

In the first 24 hours, Facebook removed 300,000 re-posted copies of the attack video after they had been viewed, and 1.2 million more before they could be viewed.

DEAN TAKAHASHI LEAD WRITER, VENTUREBEAT "It's a wake-up call for them to try to figure out how to prevent more of this in the future. Because people like this see something like that go viral and are inspired to do more of it."

The video was later posted "tens of thousands" of times on YouTube, which told the Washington Post it was dealing with an "unprecedented volume" of uploads.

YouTube tweeted: "Please know we are working vigilantly to remove any violent footage."

AHMED BANAFA CYBER SECURITY EXPERT, SAN JOSE STATE UNIVERSITY "They give a kind of digital fingerprint to every video that's uploaded. They keep it in a database, so if somebody tries to upload it later, it's just compared, and if it's bad, there's no way to upload it. That's for video upload. Livestreaming is a challenge. It is a real challenge because you have no idea what's going to happen next."

Banafa suggests that livestreaming platforms like Facebook should have task forces in multiple geographic regions, ready to be activated during a crisis.

He says other options include adding a delay to livestreams and flagging streams with a high number of live viewers so that companies can monitor them immediately.

AHMED BANAFA CYBER SECURITY EXPERT, SAN JOSE STATE UNIVERSITY "That's all going to cost them money. The digital marketing market is worth about $103 billion. Thirty-three percent belongs to Facebook. We are talking about huge money. They don't want to do that. They don't want to do that because it's going to slow down revenue. That's the problem here. They are not going to go against their own business model unless there is regulation. Regulation will force them: you have to do this."

In a statement, Facebook said the video did not trigger its AI-based automatic detection because "you need many thousands of examples in order to train a system to detect certain types of text, imagery or video" and "these events are thankfully rare."

MARK NIU CALIFORNIA "Facebook also says that while AI is incredibly important in the fight against terrorist content on its platform, it's never going to be perfect. Facebook says it has doubled the number of human content reviewers to about 15,000 people. But it also encourages users to report disturbing content. In the tragic mosque shootings, no one reported the video until twelve minutes after the stream ended. Mark Niu, CGTN, Mountain View, California."

Copyright © 2018 CGTN. Beijing ICP prepared NO.16065310-3