Technology

10:17, 29-May-2019

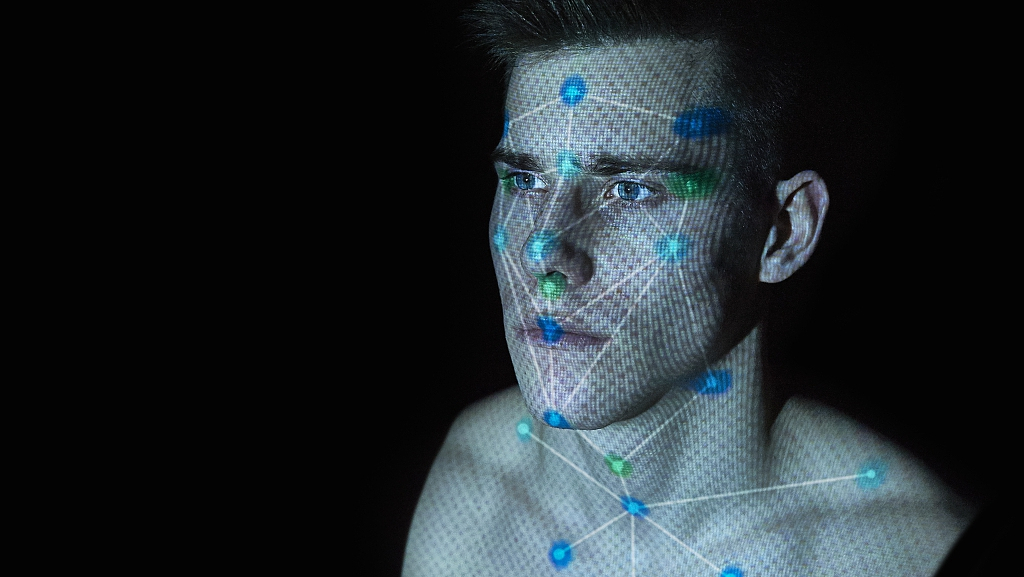

Is my face and all its data the government's property? San Francisco says No

Updated 10:48, 29-May-2019

By Mark Niu

The San Francisco Board of Supervisors recently voted in favor of an ordinance that would ban the use of facial recognition technology by city police and other government departments.

The ordinance heads to the mayor next month for final approval.

While at least six other California cities are contemplating similar measures, San Francisco would become the first major U.S. city to ban facial recognition for use by police and city departments.

That's somewhat ironic given the San Francisco Bay Area's image as the tech and innovation capital of the world.

Facial recognition technology is already being used in law enforcement organizations in many countries around the world, such as China, the United Kingdom and Canada.

It's also being used in a number of U.S. police departments, including Las Vegas, Orlando, New York City, Boston and Detroit.

But given the numerous privacy data scandals that have occurred over the past year, San Francisco is being particularly cautious.

"We can have good policing without becoming a police state. And part of that is building trust with the community based on good community information, not big brother technology," said Supervisor Aaron Peskin at a Board of Supervisors meeting.

Peskin is the supervisor who introduced the Stop Secret Surveillance Ordinance that includes that facial recognition ban.

While Peskin has gained near-unanimous support on the Board of Supervisors, the ordinance has faced resistance from local police leaders, who fear that a valuable tool will be taken away.

Anti-crime group Stop Crime SF agrees that there should be controls on facial recognition technology but says San Francisco is going too far.

"It can save lives. If someone has dementia and they wander off, then it would be a good way of identifying them," said Frank Noto, President of Stop Crime SF. "If there's a kidnapping, that's another way of identifying them and saving lives. In terms of crime, if you can find someone who is a repeat offender, this is a great way to stop them."

But Don Heider, a professor of Social Ethics at Santa Clara University, says facial recognition technology raises ethical concerns.

The key problem is that there's no "opt out."

That means people who have their facial features captured have absolutely no control over how their information is used or potentially shared.

"I think anytime you see a company or organization sort of trying to not move forward too quickly with a new technology and think about what all the ethical implications are, how it might hurt people, it's always a good thing," said Heider.

Heider also cites a study that found face analysis algorithms were less accurate when trying to classify people with darker skin.

The study, conducted by Joy Buolamwini, a researcher in the MIT Media Lab's Civic Media group, examined three commercially released facial-analysis programs from major technology companies.

In the researchers' experiments, the three programs' error rates in determining the gender of light-skinned men were never worse than 0.8 percent.

But for darker-skinned women, the error rates rose to more than 20 percent in one case and more than 34 percent in the other two.

"There have been a number of studies that have showed definitively that facial recognition is not very good for people of color," said Heider. "We could easily imagine a scenario where someone is misidentified and then there's a no-knock raid on their home and somebody gets hurt or killed and it may be the wrong person."

One thing ethicists and technologists agree on is that the debate over facial recognition is going to be coming up at more and more city council meetings across the country and the world.

Copyright © 2018 CGTN. Beijing ICP prepared NO.16065310-3