Questions about social media's role in fighting terrorism have been raised following many recent terror attacks across the world. In response, the world's most popular social network, Facebook, posted two statements on Thursday addressing the "hard questions" it is facing, including how to keep terrorist content out of the feeds of the 1.94 billion people who use Facebook each month.

"We agree with those who say that social media should not be a place where terrorists have a voice," Facebook's Monika Bickert, Director of Global Policy Management, and Brian Fishman, Counterterrorism Policy Manager, said in one of the statements. "We want to be very clear how seriously we take this — keeping our community safe on Facebook is critical to our mission."

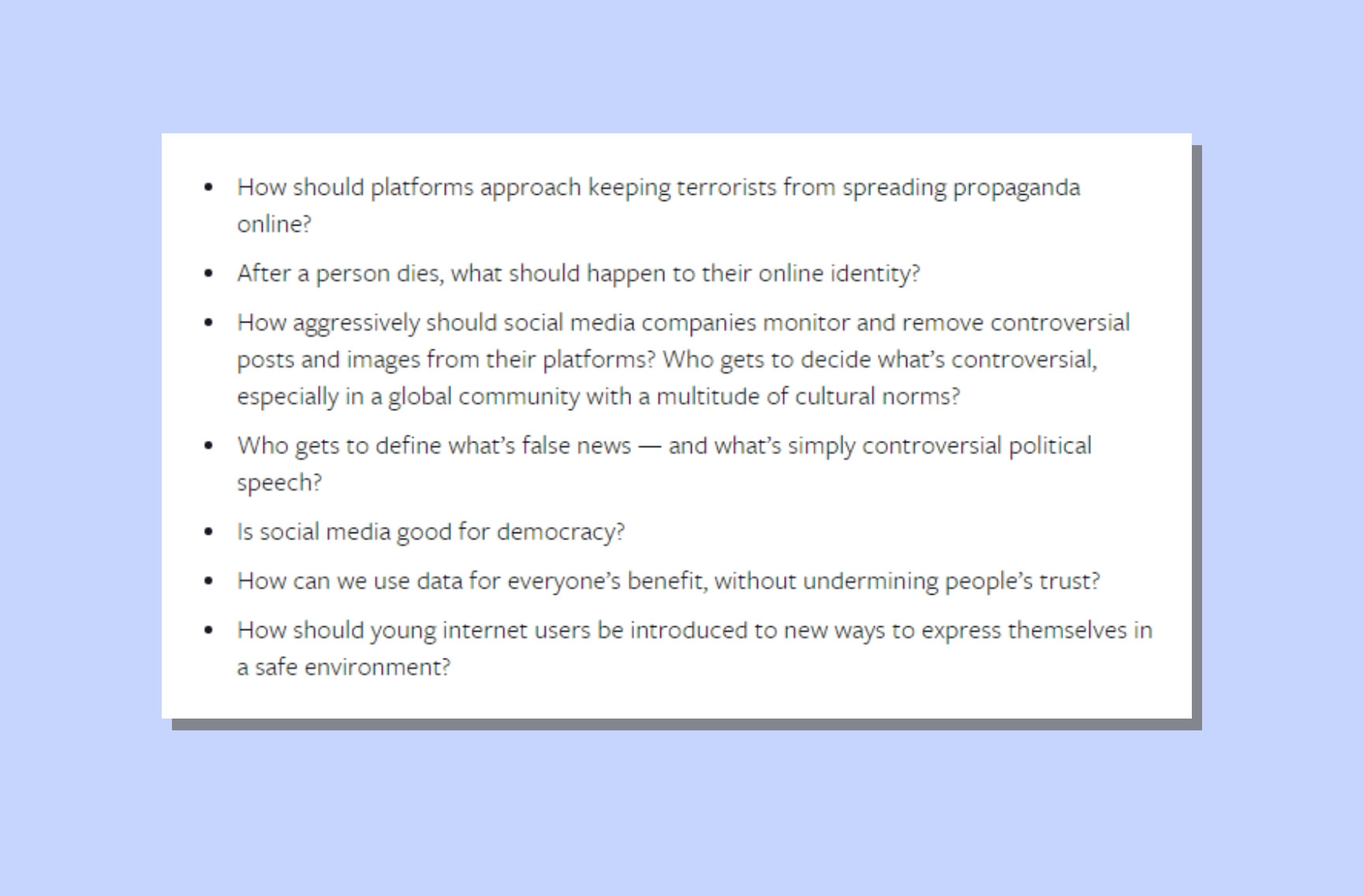

Facebook posts a list of "hard questions" it is facing. /Screenshot from Facebook's Newsroom website

No place for terror

Facebook has a zero-tolerance policy on terrorist content. Any profile, page, or group that is related to a terrorist organization or that promotes terrorism is shut down.

“We work aggressively to ensure that we do not have terrorists or terror groups using the site, and we also remove any content that praises or supports terrorism,” a Facebook spokesperson told AFP after a 2015 anti-terrorism forum between the French Interior Ministry and tech companies including Apple, Google and Twitter.

Steps taken

Having previously relied primarily on user reports, Facebook now aims to use technology to find terrorist content before people see it.

The company has used artificial intelligence to recognize ISIL-related content since late 2016. It now uses AI-based image matching to prevent previously removed images and videos from being uploaded a second time. It is also experimenting with AI language understanding, with the eventual aim of detecting terrorist propaganda posts. In particular, Facebook wants to develop text-based signals to identify such propaganda. The algorithm that detects similar posts learns on a feedback loop and is expected to get better over time.
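The idea of stopping previously removed images and videos from being uploaded again can be illustrated with a small sketch. Facebook's statements do not describe how its matching works, so everything here is an assumption for illustration: the `RemovedMediaIndex` class and its methods are hypothetical, and the sketch uses an exact SHA-256 fingerprint, which only catches byte-for-byte identical files. A production system would presumably need a fuzzier form of matching to catch edited copies.

```python
import hashlib


class RemovedMediaIndex:
    """Hypothetical index of fingerprints for media that was already removed."""

    def __init__(self) -> None:
        self._fingerprints = set()

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # Exact-match fingerprint (an assumption for this sketch):
        # identical bytes always give the same digest.
        return hashlib.sha256(data).hexdigest()

    def mark_removed(self, data: bytes) -> None:
        # Remember the fingerprint of content that reviewers took down.
        self._fingerprints.add(self.fingerprint(data))

    def is_blocked(self, data: bytes) -> bool:
        # A new upload is blocked if it matches something already removed.
        return self.fingerprint(data) in self._fingerprints


index = RemovedMediaIndex()
index.mark_removed(b"bytes of a removed video")
print(index.is_blocked(b"bytes of a removed video"))  # identical re-upload
print(index.is_blocked(b"bytes of a different video"))  # unrelated upload
```

In this sketch the first check matches and the second does not, because only identical content shares a fingerprint.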

Beyond the Facebook website, the company is also working with its subsidiaries WhatsApp and Instagram to keep terrorism-related content off all its apps and websites.

Human efforts to combat the issue are not being ignored either. The company still encourages users to report concerns and offer suggestions.

"As we proceed, we certainly don’t expect everyone to agree with all the choices we make. We don’t always agree internally. We’re also learning over time, and sometimes we get it wrong. But even when you’re skeptical of our choices, we hope these posts give a better sense of how we approach them — and how seriously we take them. And we believe that by becoming more open and accountable, we should be able to make fewer mistakes, and correct them faster."

Elliot Schrage, Facebook's Vice President for Public Policy and Communications, in one of the statements posted on Facebook's Newsroom website

Steps taken by the competition

Earlier this year, many people were arrested in China for spreading terrorism-related rumors on WeChat, a Facebook competitor and one of the most popular social media platforms in China, with 889 million monthly active users.

Facebook's competitor WeChat is facing similar "hard questions". /CGTN Photo

WeChat performs its censorship on the server side. Messages sent through the app pass through a remote server that implements the censorship rules. If a message includes any keyword on the censorship list, the entire message is blocked.
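The server-side rule described above, where one listed keyword blocks the whole message, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not WeChat's actual implementation: the function names `should_block` and `relay`, the case-insensitive substring match, and the placeholder keyword list are all hypothetical.

```python
from typing import Iterable, Optional


def should_block(message: str, keywords: Iterable[str]) -> bool:
    # Assumption: matching is a case-insensitive substring check.
    text = message.casefold()
    return any(keyword.casefold() in text for keyword in keywords)


def relay(message: str, keywords: Iterable[str]) -> Optional[str]:
    # The server either forwards the message unchanged or drops it entirely;
    # it does not strip out only the offending keyword.
    if should_block(message, keywords):
        return None
    return message


# Placeholder list for illustration only.
blocked_keywords = ["forbidden-term"]

print(relay("See you at lunch", blocked_keywords))
print(relay("This mentions a Forbidden-Term here", blocked_keywords))
```

The first message is forwarded as written, while the second is dropped in full and `relay` returns `None`.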