The next time you come across a picture of a ravishing dish on Instagram or WeChat that whets your appetite but you can't quite make out what it is made of, don't rack your brain trying to guess the recipe.

An AI system unwrapped earlier this week helps those with culinary curiosity identify the ingredients of an unknown dish and offers step-by-step instructions on how to make it, simply by analyzing a photo they upload.

Researchers from the Polytechnic University of Catalonia, the Massachusetts Institute of Technology (MIT) and the Qatar Computing Research Institute have developed a deep-learning algorithm that can whip up a recipe just by "looking" at a photo of the dish.

They fed the neural network one million recipes, along with one million photos of the finished dishes, drawn from popular websites such as Allrecipes.com and Food.com. The resulting database, which they dubbed Recipe1M, is accessible through a web portal they called Pic2Recipe.

What's cooking?

With a single click, users can upload a photo of the mystery dish to the website, and the system then uses machine learning to analyze it against its massive trove of data.

It then predicts a list of likely ingredients along with relevant recipes, ranked by how confident the AI is that each one matches the image.

Pic2Recipe thought this veggie omelet made by one of our editors was a frittata. /CGTN Photo

The image recognition system learns to do this during training, in which it explores how a photo of a dish relates to what goes into preparing it.

Learning these associations allowed the AI to retrieve the correct recipe for a food image 65 percent of the time in test trials.
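The researchers' announcement doesn't spell out the model's internals here, but one common way to build this kind of photo-to-recipe lookup, assumed purely for illustration, is to turn both the photo and every recipe into lists of numbers in a shared space, rank recipes by how closely their numbers point in the same direction as the photo's (cosine similarity), and score the system by how often the true recipe lands at the top. The toy vectors and the function names `rank_recipes` and `top1_accuracy` below are hypothetical; this is a sketch of the general idea, not Pic2Recipe's actual code or its exact test metric.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_recipes(image_vec, recipe_vecs):
    """Return recipe names sorted from most to least similar to the photo's vector.

    In a real system a trained network would produce these vectors; here they are
    hand-made placeholders (an assumption for illustration).
    """
    scored = [(cosine(image_vec, vec), name) for name, vec in recipe_vecs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored]

def top1_accuracy(queries, recipe_vecs):
    """Share of (photo_vector, true_recipe) pairs whose true recipe is ranked first."""
    hits = sum(1 for img, truth in queries if rank_recipes(img, recipe_vecs)[0] == truth)
    return hits / len(queries)

# Hypothetical three-number "embeddings" for three recipes.
recipes = {
    "muffins": [0.9, 0.1, 0.0],
    "frittata": [0.1, 0.8, 0.3],
    "couscous": [0.0, 0.2, 0.9],
}
photo = [0.2, 0.7, 0.4]
print(rank_recipes(photo, recipes))  # ['frittata', 'couscous', 'muffins']

queries = [(photo, "frittata"), ([1, 0, 0], "muffins"), ([0, 1, 0], "couscous")]
print(top1_accuracy(queries, recipes))  # 2 of 3 correct, about 0.667
```

In this toy run the last photo is "really" couscous but sits closer to the frittata vector, so it counts as a miss, much like the mix-ups described below.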

Although its announcement has been cooking up a storm among AI experts and foodies, the algorithm still has a long way to go before it is perfected.

CGTN Digital put the system to the test and found that some results left the taste buds wanting more.

A photograph of homemade apple muffins on a baking pan was correctly identified as featuring muffins, though the system missed the flavor and suggested, most likely based on the color of the baked muffins, that they were chocolate flavored.

The AI similarly mistook a veggie omelet for a frittata, and when presented with images of seven-vegetable couscous and a chicken burrito smothered in cheddar cheese, it found "no matches".

The researchers behind Pic2Recipe are not sugarcoating the reality: they say they are aware that, at least for the time being, the system struggles to parse foods that are too complex or that are prepared in too many different ways.

This chicken burrito image resulted in no matches when uploaded on Pic2Recipe. /CGTN Photo

"It still has a lot of difficulty identifying certain food types (…) It makes a big difference if photos are taken from close up or far away, or if the photo is of a single item, multiples, or part of a complete dish," Nick Hynes, an MIT electrical engineering and computer science student and a member of the research team, told Wired UK.

There is good news for baking buffs, though: recipes for cookies and muffins are easy for the system to identify, thanks to their popularity online.

More than a recipe-finding app

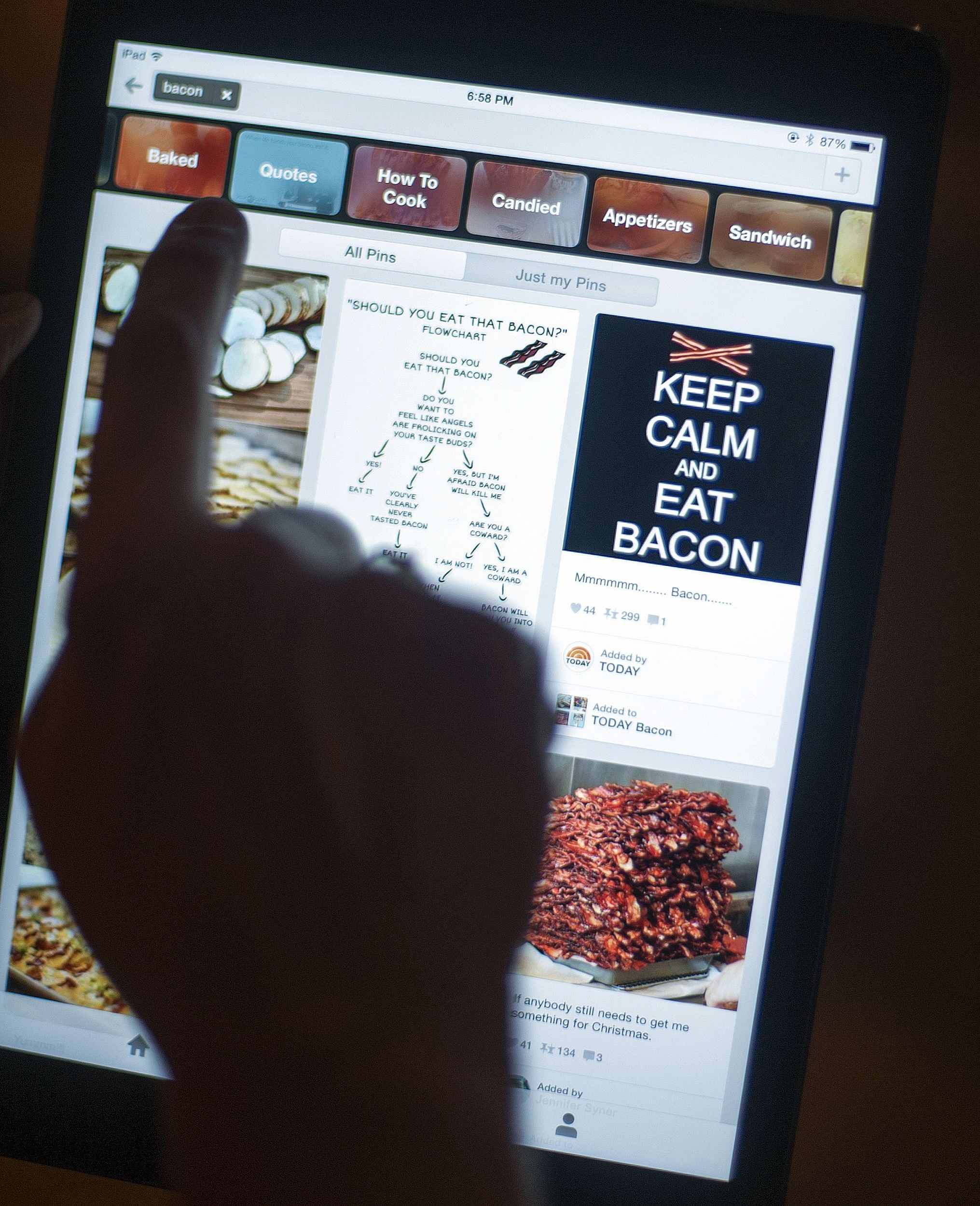

The technology is reminiscent of the dish recognition feature Pinterest introduced back in May, which lets users find recipes based on photos they take of their meals.

That feature was an upgrade to Lens, the tool Pinterest rolled out last summer, which lets users search for themes and pins related to the snaps they take with their phone or upload from their camera roll.

In May, Pinterest introduced a dish recognition feature that helps users search for pins and recipes relevant to their uploads. /VCG Photo

But the researchers behind the machine learning system emphasize that Pic2Recipe is more than just a way to fish for recipes.

While plenty of home cooks could justifiably see it as the cherry on top of their relentless efforts to innovate in the kitchen, Hynes says the interface is just a side dish in the team's quest to learn deep associations between recipes and images, and to train the AI to understand how meals are cooked and how to tell food items apart.

“What we’re really exploring are the latent concepts captured by the model. For instance, has the model discovered the meaning of ‘fried’ and how it relates to ‘steamed?’” he noted.

Their goal is to eventually help people improve their dietary habits, and in turn their health, by letting them figure out exactly what goes into what they are eating and determine a meal's nutritional value and calorie count when nutritional information is not available.