12:35, 03-Oct-2018

Robots & Business: Artificial Intelligence influence raises ethical concerns

Updated

11:40, 06-Oct-2018


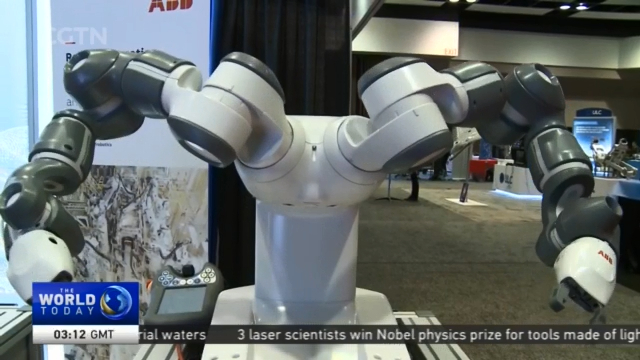

As robots become more common in the workplace, the industry is trying to figure out how to integrate the technologies with business, both safely and ethically. CGTN's Mark Niu has the details.

The robots are coming. Actually, they're already here in greater numbers than ever before. Roboconference in Silicon Valley demonstrates how robots are increasingly finding work in factories and warehouses without taking any breaks.

MARK NIU SAN FRANCISCO "The startup Rover Robotics has created this platform for any company to put robotic applications on top of it for just $4,000. It's technology used by bomb squads and first responders. The hope is to spread it to other verticals like agriculture and warehouses."

Cheaper, faster and smarter. But on a scale of 1 to 10, with 10 being most concerned, where does Santa Clara University's Director of Technology Ethics rank his concern about the future of robotics and artificial intelligence?

BRIAN PATRICK GREEN DIR. OF TECHNOLOGY ETHICS, SANTA CLARA UNIVERSITY "I'm about at a level of 8 or 9. The world already has plenty of problems in it. And AI and robotics and machine learning technologies are going to take the problems we already have and amplify them. Is this dangerous? Is this risky? And the answer is I think clearly yes."

Green's department has developed an ethics curriculum for companies such as X, Google's lab for secretive projects.

Green cites AI and weapon systems as his biggest concerns, saying that he can envision the technology getting away from humans.

BRIAN PATRICK GREEN DIR. OF TECHNOLOGY ETHICS, SANTA CLARA UNIVERSITY "We feel like we want to have control, but at the same time we want to delegate lots of power to the technology. And so based on that, what is the balance going to be between that. And I think if we want to maintain safety of the technology, we need to delegate less rather than more."

When technology gets out of control, that's where guys like these step in: lawyers from a robotics and automation group.

These lawyers have seen everything from accidental plant shutdowns to employees getting hands stuck in autonomous devices. But they say AI opens up a new legal frontier.

BRIAN CLIFFORD, PARTNER FAEGRE BAKER DANIELS LAW FIRM "We normally have some kind of culpability element for those determinations. When there is no human involved, and X was chosen over Y, how do you deal with culpability? Is it the programmer of the machine that ultimately led to it even though at some point it becomes its own learning mechanism, or not. But at the end of the day, we are all going to struggle with this."

Clifford says one continuous challenge is that the legal system is always playing catch-up to the pace of technology. Mark Niu, CGTN, Santa Clara, California.


Copyright © 2018 CGTN. Beijing ICP prepared NO.16065310-3
