Tech & Sci

14:56, 09-Sep-2017

AI facial recognition can infer if a man is gay or straight

By Wang Xueying

Can a computer identify a person’s sexual orientation? The answer may be "Yes".

Artificial intelligence that analyzes photos of people’s faces has a far better “gaydar” than humans do, according to a new study by Yilun Wang and Michal Kosinski of Stanford University.

Published in the Journal of Personality and Social Psychology, the study shows that AI facial recognition can accurately distinguish between gay and heterosexual men based on pictures of their faces.

After “watching” 130,741 images from volunteers who had already stated their sexual orientation, the AI could distinguish between gay and straight men with an accuracy of up to 81 percent.

Composite images of gay and straight subjects /The Telegraph Photo

Humans, by comparison, were accurate 61 percent of the time. The machine performed even better when given five images of a person’s face rather than one: its success rate reached 91 percent for men and 83 percent for women, the study shows.

The findings can be seen as strong support for the theory that a person’s sexual orientation is influenced by exposure to various hormones before birth. They may also help improve the understanding of human cognition.

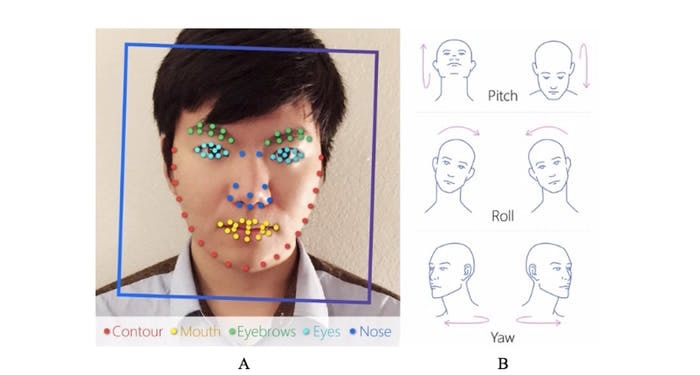

Deep neural networks were used to extract features. /The Telegraph Photo

However, Yilun Wang and Michal Kosinski also warned of potential dangers raised by the study.

“Given that companies and governments are increasingly using computer vision algorithms to detect people's intimate traits, our findings expose a threat to the privacy and safety of gay men and women,” they pointed out in the study. In their view, LGBT rights may be threatened if AI facial recognition technology is misused, especially in relatively conservative countries.

Copyright © 2018 CGTN. Beijing ICP prepared NO.16065310-3
