Editor's note: This is the 60th article in the COVID-19 Global Roundup series. Here is the previous one.

A screen shot from the video titled 'Plandemic: The Hidden Agenda Behind COVID-19'.


Recently, a conspiracy theory-laden, documentary-style video titled "Plandemic: The Hidden Agenda Behind COVID-19" took on a life of its own, even after the original was taken down.

The video, which runs for more than 20 minutes and has been viewed tens of millions of times, promotes a string of questionable, false and potentially dangerous coronavirus theories: that the pandemic was planned, that the coronavirus was manufactured in a lab, that it is injected into people via flu vaccinations and that wearing a mask could make one sick.

It also attacks the record of Dr. Anthony Fauci, the director of the National Institute of Allergy and Infectious Diseases, and promotes unproven treatments.

The "Plandemic" video has been removed from the big social media platforms, but short clips are still available on YouTube, and full versions can still be found on some fringe websites with lax moderation.

This is just one typical example among tens of thousands of pieces of misinformation on social media, which has become both an incubator and a megaphone for misinformation and conspiracy theories amid the COVID-19 pandemic.

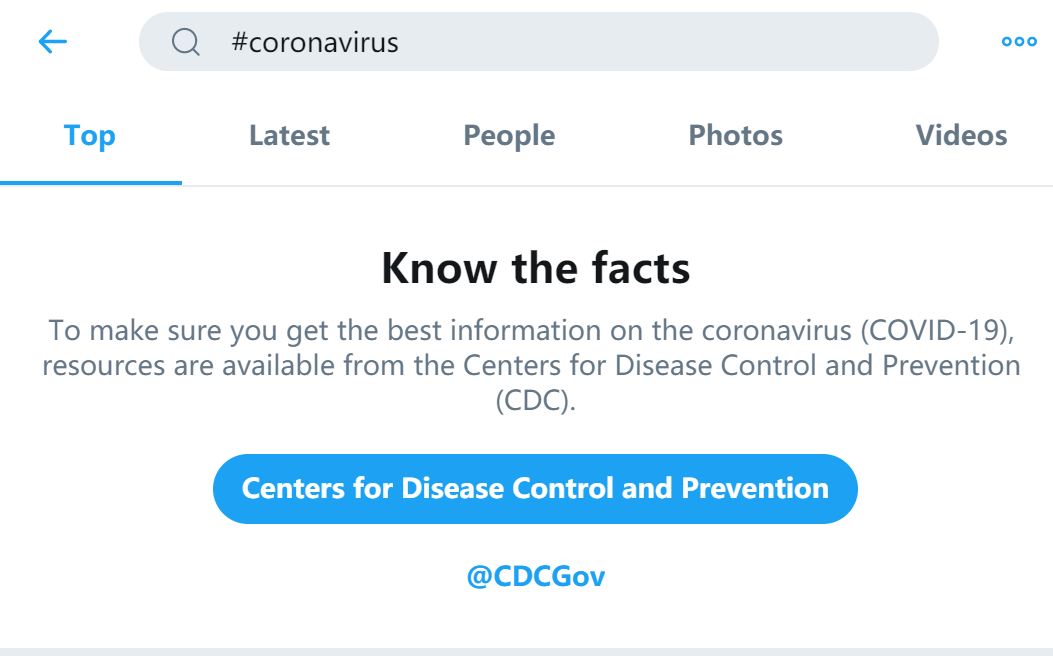

A screenshot shows the results of searching the hashtag 'coronavirus' on Twitter.

Testing big tech

Big tech companies such as Twitter, Facebook and YouTube are racing to take down, or attach warning labels to, harmful, dubious and misleading content related to the pandemic.

Facebook on Tuesday announced that it had put warning labels on about 50 million pieces of content related to COVID-19, after taking the unusually aggressive step of banning harmful misinformation about the new coronavirus at the start of the pandemic.

Last month, it announced that it would retroactively provide links to verified COVID-19 information to users who had previously "Liked," reacted, or commented on "harmful misinformation" related to the pandemic.

The company also removed about 4.7 million posts connected to hate organizations on its flagship app in the first quarter, up from 1.6 million in the fourth quarter of 2019. It deleted 9.6 million posts containing hate speech, compared with 5.7 million in the prior period.

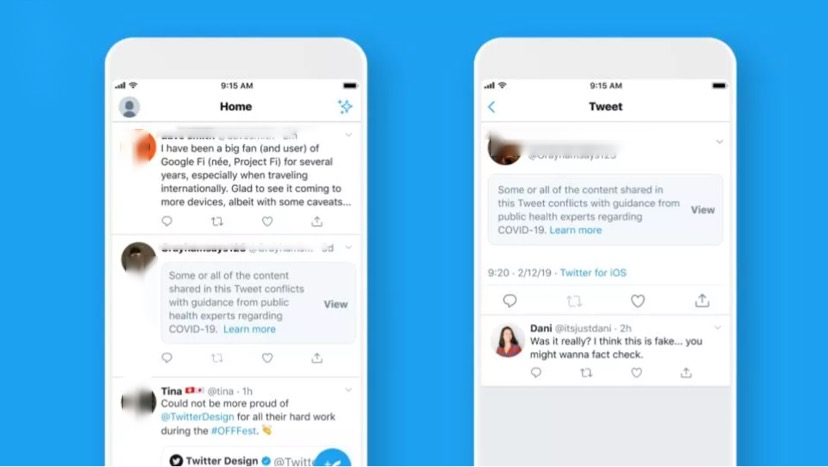

Twitter, mirroring Facebook, recently announced a new initiative to help tackle misinformation surrounding the COVID-19 pandemic. The company is removing tweets that risk causing harm by spreading dangerous misinformation about COVID-19, and attaching labels and warning messages to tweets with "disputed or misleading information related to COVID-19."

Removal is the most serious consequence, and a warning is the next most serious. Tweets with disputed information that Twitter judges to carry a severe propensity for harm will get a warning message, while tweets deemed moderately harmful will receive an exclamation mark underneath them with a link directing users to reliable information.

Twitter and Facebook now both work with third-party fact-checkers. Unlike Facebook, which encourages users to actively report misinformation, Twitter has long described itself as the "free speech wing of the free speech party" and had no policies for flagging or removing false tweets; it has now opened a channel for reporting them.

Once an account holder sees misinformation and reports it to Twitter, the company will "assess it regardless under its new expanded rules." On Facebook, users who see a false post can flag it; once a post is flagged, other users viewing it will be warned and directed to content from a professional fact-checking organization.

Twitter blocked out a piece of misleading information that goes against the guidance of public health authorities.


Why do people fall for misinformation?

The harmful results of the spread of misinformation during the pandemic are plain to see. Aside from inciting hatred and the mistreatment of Asian people, misinformation has also fueled distrust in health authorities, stoked anti-vaccine sentiment and increased the spread of the coronavirus as people abandon protective social norms such as physical distancing and wearing masks in public places.

Returning to the viral "Plandemic" video: although such misinformation poses a threat to public health, the 26-minute video was still shared by tens of thousands of people across multiple social media platforms. That raises a question: why do people fall for misinformation?

There is no single answer, but from a psychological perspective, two prominent explanations stand out.

One group argues that our ability to reason is hijacked by our partisan convictions: people tend to trust information that resonates with the opinions they already hold (the "rationalization" camp). The other group argues that the problem is mental laziness: we often fail to exercise our critical thinking faculties.

In recent years, the rationalization camp has gained considerable prominence. It contends that, when it comes to politically charged issues, people use their intellectual abilities to persuade themselves to believe what they want to be true rather than to discover the truth.

Take the origins of the coronavirus. Though many scientists have stated that it evolved naturally and was not made in a laboratory, some figures at the highest levels of government still share the misinformation online, pushing fringe theories into the mainstream and helping validate conspiratorial claims, which has blurred the line between what is real and what is not.

A Facebook logo is displayed on a smartphone in this illustration taken January 6, 2020. /Reuters


In this process, social media has played a key role by allowing users to curate their own information environment and choose what they see, which can lead to filtered realities. Put another way, on social media, people can expose themselves only to information that appeals to them or that they want to be true.

Another group of experts contends that the problem is lazy thinking: it is natural for our brains to take mental shortcuts to ensure our survival, and this is especially true in the context of a global pandemic.

Experts interviewed by a Canadian news website say people are predisposed to believing things that are not true, mainly because we cannot know the truth about everything, particularly at a time when governments and health authorities across the world are constantly adjusting their responses to the pandemic and introducing new policies to keep it from spreading.

They explained that a period of constant change creates a sense of insecurity and anxiety, leaving people more desperate for information that helps them make sense of what is going on and that is easy to accept.

"Conspiracy theory often is more psychologically pleasing and convenient (than reality). It makes simpler sense than a complex phenomenon," David Black, a communications theorist at Royal Roads University in British Columbia, told the Canadian news website.

"Its primal simplicity cuts through the noise and confusion and uncertainty that is the world on a normal day, but especially during a pandemic.”